Reading Notes

Preamble

This page contains my reading notes on the most important articles I've read since 2015. I take notes on articles I want to remember.

You can click on a tag to show only notes with that tag, and click the tag again to show all notes.

Most of the time, first-person pronouns in my notes refer to the author of the book/article, not to me. Lines preceded by "me:" give my own thoughts.

Disclaimers:

- I primarily write these notes for my own benefit, so some parts might not make sense. But I hope you find something useful in them.

- Unless otherwise specified, these notes represent my interpretation of the authors' views, not my own views. But also I can't promise that I understood the authors' views correctly.

- I exported my notes from org-mode to HTML with minimal editing. Some things might not look right.

Readings

Deep Research: Foot-in-the-Door Regulations policy causepri

https://chatgpt.com/share/6862f83b-9658-8011-8898-afacecbe8390

Key question: Do weak regulations make it easier to pass strong regulations later (via the foot-in-the-door effect), or do they make it harder (by reducing political will)?

me: The evidence cited doesn't look particularly strong, and I didn't go into much depth, so I don't take any of this as strong evidence

Foot-in-the-door hypothesis

- A weak policy keeps attention on the issue

- Environmental regulations have followed a "soft law to hard law" trajectory

- ex: Vienna Convention provided groundwork to reduce CFCs but no strong regulation. Later the Montreal Protocol required that CFCs be phased out

- Weak policies expand the Overton window

- this was the original use case for the term "Overton window"

- Same-sex marriage started with weak or regional laws which became stronger over time

- Passing regulations creates new constituencies

- ex: renewable energy subsidies create groups with a financial interest in advocating for stronger environmental regulations

- Winning Coalitions for Climate Policy (2015): regulations can create positive feedback by strengthening coalitions

- Policy sequencing can increase public support for ambitious climate policy (2023) – initial weak policies increased support among skeptical parties when they perceived the policy to be effective

- me: Various examples show weak-to-strong policies over a 10–20 year time span

Weak regulations hindering future reforms

- Weak policies could give the impression that the problem is solved

- ex: Tobacco companies lobbied for weak state-level laws that would preempt stronger municipal ordinances (2012)

- me: I don't think this is relevant b/c the point of the state laws was to cancel out stronger laws that already existed

- More relevantly, weak state laws reduced public support among smokers for strong anti-smoking policies

- Policies to establish regulatory bodies might let those bodies become captured by corporate interests

- me: this is part of why I don't like the idea of putting AI company employees on regulatory bodies, and I am skeptical of pushing for more "government expertise"

- Saul Levmore argues that incrementalism encourages regulated interest groups to lobby for their competitors to be similarly regulated

- me: I don't think this is much of a downside for AI x-risk

- Harvard Law Review (2018): it may be better to have no regulations at all, because that makes it harder for anti-regulation parties to argue against passing regulations

Where does each pattern prevail?

- me: This is GPT's synthesis but it looks right to me

- Incrementalism is more likely to work for issues with high public salience

- Incrementalism is more likely to work if it grows/strengthens the pro-regulation coalition

- Weak policies create positive feedback when they have visible positive effects

- Weak policies should be written with future strengthening in mind: sunset provisions, periodic review requirements, or giving agencies authority to strengthen their rules

Caplan: Myopic Empiricism of the Minimum Wage economics

https://www.econlib.org/archives/2013/03/the_vice_of_sel.html

Some good empirical research suggests no effect of minimum wage on unemployment, but (a) I have a strong prior that demand curves are downward-sloping, and (b) if you look at evidence that isn't directly about minimum wage, it supports a disemployment effect:

- Strong consensus that low-skilled immigration has little effect on low-skilled wages, which implies nearly-horizontal labor demand (and therefore large effect of raising minimum wage)

- European labor market regulation research suggests that regulation increases unemployment. If raising the cost of employment increases unemployment, then so should raising minimum wage

- Price floors are known to create surpluses in general

- Keynesian economics says sticky wages cause unemployment, i.e. wages being higher than the equilibrium price causes unemployment. Minimum wage would also produce wages higher than the equilibrium price
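The immigration argument hinges on the elasticity of labor demand. A minimal sketch, assuming a constant-elasticity demand curve (employment = A * wage**(-elasticity)); the two elasticities (0.1 for steep demand, 3.0 for nearly horizontal demand) and the 10% wage increase are hypothetical numbers for illustration, not estimates from Caplan or the literature:

```python
def employment_change(wage_increase: float, elasticity: float) -> float:
    """Proportional change in employment when a binding wage floor raises the
    wage by `wage_increase` (0.10 = 10%), assuming a constant-elasticity labor
    demand curve: employment = A * wage**(-elasticity). Illustrative only."""
    return (1 + wage_increase) ** (-elasticity) - 1


# Hypothetical elasticities: a steep demand curve vs. a nearly horizontal one.
steep = employment_change(0.10, elasticity=0.1)
flat = employment_change(0.10, elasticity=3.0)

print(f"steep demand: {steep:.1%}")  # roughly -1% employment
print(f"flat demand:  {flat:.1%}")   # roughly -25% employment
```

The same wage floor costs far more jobs when demand is elastic, which is why evidence that low-skilled immigration barely moves low-skilled wages (pointing toward the flat case) bears on the minimum wage question.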

My thoughts

- I generally form economic beliefs based on (1) econ 101 predictions and (2) surveys of economist opinion

- Minimum wage is the only issue (AFAIK) where economist opinion deviates from econ 101

- There is not a consensus among economists, but the ~median position is something like "it's plausible that minimum wage does not cause unemployment"

- As I understand it, economists' views mainly come from empirical studies showing no effect of minimum wage on unemployment

- Caplan makes a strong case that if you look at a wider array of empirical evidence, then it mostly supports the claim that minimum wage causes unemployment

- Therefore, I am reasonably confident that the minimum wage does, in fact, cause unemployment, even though I'm disagreeing with the median economist

Deep Research: How to make an international treaty happen ai causepri policy xrisk

Steps

- Agenda-setting and coalition-building: a core group agrees on the need for regulation

- Diplomatic dialogues, e.g. Bletchley Park summit

- Formal negotiations.

- me: this section was kinda useless tbh

Timeline

- Pandemic preparedness treaty has been in ongoing discussion for 3 years

- Nuclear non-proliferation treaty was in negotiations for three years (1965–1968)

- 1987 Montreal Protocol (to protect the ozone layer) was negotiated in two years

- Add 1–2 years for countries to ratify the treaty

Historical precedents

- Nuclear arms control – Partial Test Ban Treaty (1963) and Non-Proliferation Treaty (1968). There is a still-pending Comprehensive Test Ban Treaty (1996)

- Biological Weapons Convention (1972) and Chemical Weapons Convention (1993) ban the development of biological and chemical warfare agents

- Convention on Biological Diversity (2010) put a moratorium on geoengineering

- Asilomar Conference (1975) – biologists voluntarily agreed to halt certain gene-splicing experiments

Roles for non-profits

- International Campaign to Ban Landmines (a coalition of non-profits) played an important role in getting landmines banned

- me: this section was also kinda useless

Bentham's Bulldog: Halstead's climate change report causepri xrisk

Substack, EA Forum, Halstead report

- me: I am not distinguishing whether claims are being made by Bentham or Halstead. Bentham mostly repeats Halstead's claims but adds a few of his own claims on top

- me: From spot checking, I found a high rate of inaccuracies in Bentham's summary, so these notes might not be useful. I might need to actually read Halstead's report

Introduction

- Best guess is that the chance of existential catastrophe is 1 in 100,000, and Halstead struggles to get the risk above 1 in 1000

- [ ] me: Halstead says the above (pg 8) but also says he struggles to get the risk above 1 in 100,000 (pg 7)

- Climate change is likely to increase wild animal suffering

How much warming will there be?

- Early climate projections were too pessimistic because renewable energy has gotten much cheaper than predicted

- 3,000 Integrated Assessment Models predicted that the cost of solar would decline by at most 6% per year over 2010–2020. In fact it declined by 15% per year

- Countries representing 2/3 of global emissions have committed to net-zero by 2050

- On current policy, by 2100 we will most likely have 2.7° C of warming. 5% chance of more than 3.5° C. This implies well below 1% chance of more than 6° C

- [ ] me: This looks like a within-model prediction. What about model error? Past projections were pretty inaccurate. Relevant: slideshow by Halstead

- This author puts significantly higher credence on extreme warming scenarios by arguing that we shouldn't have much credence on climate models or economic forecasts
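Two quick sanity checks on the numbers above, as a sketch. Part (a) compounds the solar cost-decline rates over the ten years 2010–2020. Part (b) asks what the 2.7° C / 3.5° C figures imply for the chance of more than 6° C, under the assumption that warming follows either a normal or a lognormal distribution with median 2.7° C and 95th percentile 3.5° C. Treating the "most likely" 2.7° C as the median and choosing these two distribution families are my assumptions, not Halstead's method.

```python
import math

# (a) Compound the solar cost-decline rates over 2010-2020 (10 years).
years = 10
predicted_remaining = (1 - 0.06) ** years  # "at most 6% per year"
actual_remaining = (1 - 0.15) ** years     # "15% per year"
print(f"predicted: cost falls to {predicted_remaining:.0%} of its 2010 level")
print(f"actual:    cost falls to {actual_remaining:.0%} of its 2010 level")

# (b) Tail probability of >6 C, ASSUMING warming by 2100 is normal or
# lognormal with median 2.7 C and 95th percentile 3.5 C.
Z95 = 1.6448536269514722  # 95th percentile of the standard normal


def upper_tail(z: float) -> float:
    """P(Z > z) for a standard normal variable."""
    return 0.5 * math.erfc(z / math.sqrt(2))


median, p95, threshold = 2.7, 3.5, 6.0

# Normal fit: the 95th percentile sits Z95 standard deviations above the median.
sd = (p95 - median) / Z95
p_normal = upper_tail((threshold - median) / sd)

# Lognormal fit: the same logic applied to log-temperatures.
log_sd = (math.log(p95) - math.log(median)) / Z95
p_lognormal = upper_tail((math.log(threshold) - math.log(median)) / log_sd)

print(f"P(>6 C), normal fit:    {p_normal:.1e}")
print(f"P(>6 C), lognormal fit: {p_lognormal:.1e}")
```

For (a), the predicted rate leaves solar at roughly 54% of its 2010 cost, versus roughly 20% for the actual rate. For (b), both fits put the chance of more than 6° C far below 1%. However, that result comes entirely from the thin tails of the assumed distributions, which is the model-error concern raised in the note above.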

How past warming periods have gone

- In Permian extinction, volcanic activity released huge amounts of CO2 and other gases. But extinction probably wasn't primarily caused by CO2

- In geological record, high CO2 is correlated with abundance, not extinction

- During the Mid-Cretaceous period, temperatures were 20° C warmer than pre-industrial levels, with CO2 between 500ppm and 1000ppm

- [ ] me: how confident are we about these numbers?

- But anthropogenic warming will be unusually rapid

Agriculture

- To cause dramatic mass starvation, climate change would have to offset the increase in crop productivity

- Based on a meta-analysis plus some other studies, Halstead estimates 2° C of warming would cause global crop production to drop by ~0.1%

- Even 9.5° C warming—basically the highest reasonable level even if we burn through all fossil fuels—would not destroy global agriculture

- [ ] me: how confident are we about (1) quantity of fossil fuels, (2) how much warming there would be, (3) the effect on agriculture?

Ecosystem collapse and threats to agriculture

- Since 1900, vertebrate species have been going extinct at ~3x the background rate

- me: Vertebrate species don't seem relevant because food crops don't depend on them

- At the rate of species loss since 1980, it would take 18,000 years to qualify as a mass extinction

- me: What if the rate of species loss accelerates, which it presumably will?

- Forest cover has been increasing, not decreasing

- me: This is not consistent with the cited evidence: the rate of decrease has slowed, but forest cover is still decreasing (Figure 1)

- Eurasia (and especially England) has had enormous loss of biodiversity over the last thousand years, but agricultural production increased enormously

- me: This seems inconsistent with the claim that it will take 18,000 years to qualify as a mass extinction

Heat stress and sea level rise

- One pessimistic estimate found that warming could increase global mortality by 1% due to heat stroke. This is bad but not an existential threat

- Sea level rise is likewise not an existential threat. It will likely displace ~100,000 people per year

Tipping points

- Various proposed feedback loops seem unlikely

- Biggest concern is cloud feedback: warming causes more clouds to form, which trap more heat. Cloud feedbacks are the main reason why, in the past, climate was more sensitive to warming at higher temperatures. This is the most likely scenario for extreme warming (~13° C), but it is still extremely unlikely

- And historically, 13° C of warming hasn't caused a mass extinction, but it would still pose a non-trivial existential risk

- Warming triggers more warming, but not by enough to cause a runaway effect

- If runaway warming were possible, it would have happened during past eons when temperatures and CO2 levels were far higher

- BOTEC suggests 1 in 500,000 chance of burning all fossil fuels -> 1 in 6 chance of at least 9.6° C. This implies a direct risk of extinction of 1 in 3 million

- [ ] me: This result almost entirely depends on putting an extremely tiny chance on an event that has nothing to do with climatology, which seems weird

- [ ] me: This calculation should integrate over both estimates instead of combining two point estimates. What is the chance we burn 50% of fossil fuels, and what is the chance that that produces 9.6° C of warming?
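The 1 in 3 million figure matches multiplying the two point estimates (1/500,000 × 1/6). Below is a minimal sketch of the alternative suggested in the note above: sum over scenarios for how much fossil fuel gets burned, each with its own chance of reaching 9.6° C. Apart from the two point estimates, every number in the scenario table is a HYPOTHETICAL placeholder, not taken from Halstead or Bentham. The sketch only shows the structure of the calculation. Like the point-estimate product, it treats reaching 9.6° C as the event of interest.

```python
from fractions import Fraction

# Point-estimate BOTEC as summarized in the notes.
p_burn_all = Fraction(1, 500_000)
p_hot_given_all = Fraction(1, 6)  # P(>= 9.6 C | all fossil fuels burned)
point_estimate = p_burn_all * p_hot_given_all
print(point_estimate)  # 1/3000000

# Integrating instead: sum over scenarios for how much fossil fuel is burned.
# HYPOTHETICAL placeholder numbers, chosen only to show the structure.
# Each value is (P(scenario), P(>= 9.6 C | scenario)).
scenarios = {
    "burn ~25%": (Fraction(60, 100), Fraction(1, 100_000)),
    "burn ~50%": (Fraction(30, 100), Fraction(1, 1_000)),
    "burn ~75%": (Fraction(10, 100) - p_burn_all, Fraction(1, 50)),
    "burn ~100%": (p_burn_all, p_hot_given_all),
}
# The scenarios should be exhaustive, so their probabilities sum to 1.
assert sum(p for p, _ in scenarios.values()) == 1

integrated = sum(p * p_hot for p, p_hot in scenarios.values())
print(f"point estimate: {float(point_estimate):.2e}")
print(f"integrated:     {float(integrated):.2e}")
```

With these made-up inputs, the partial-burn scenarios dominate the total. This illustrates the structural worry: the answer can hinge on intermediate scenarios that a chain of two point estimates ignores.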

Economic costs

- Various papers estimate that climate change will reduce GDP by a total of ~5% (with low variance between papers)

Migration

- Today ~23 million people per year are displaced by weather. Most displaced people stay within national borders

- Sea level rise is estimated to displace a total of 17–72 million people in the 21st century

- Halstead reviewed ~30 studies which suggest that the number of migrants will likely increase by 10% on the high end

- me: there is high between-study variance, see box plots

Conflict

- Historically, cooling periods, not warming periods, were associated with international strife

- But we should be hesitant to extrapolate from history

- me: Seems like warming was good historically b/c cold periods made it hard to grow crops. Today's warming is different

- IPCC's takeaways:

- Historically, climate change has not increased interstate conflicts

- Climate change increases the odds of civil conflict, largely due to decreased crop yield or perhaps water scarcity

- Historically, climate change has been a small driver of civil conflict relative to other factors

- The future effect is unclear

- me: The report cites evidence about historical conflicts but I'm skeptical that that matters much because most climate change hasn't happened yet

- Halstead estimates that at the high end, warming-related conflicts will cause an extra 40,000 deaths by 2100

- me: I don't see where the report says that. I see "This suggests that battle deaths will increase to 40,000 by 2100, other things equal" (pg 389)

Mechanisms that could cause a great power war

- Water wars – These are rare, and water scarcity is more likely to lead to cooperation than conflict. The only recorded incident of an outright water war was 4500 years ago

- Economic costs – We already saw these are unlikely to be catastrophically large

- Civil conflict – As we've seen, the risk of civil conflict is non-trivial, but unlikely to be catastrophic

- me: When did we see that? Bentham's summary didn't say anything about catastrophic risk from civil conflict

- Spurring mass migration – Climate change is likely to have limited impact on migration

80K: Improving China-Western coordination on GCRs (2023) ai causepri policy

Which topics are most important to understand?

- Attitudes toward doing good

- How does professional networking work?

- Attitudes toward philanthropy

Why we don't want to do 'outreach' in China

- 80K made mistakes in its early days in the UK and US, which have been difficult to unwind. People still associate 80K with earning to give

- Broad-based outreach is risky because it's hard to reverse. First impressions are sticky

- China is especially risky because the government is wary of NGOs doing grassroots outreach. If an org is blacklisted, then that's a nearly irreversible setback

- It's easy to accidentally promote an unhelpful message because communication works very differently than in the West

- Much Western messaging doesn't even make sense in China, e.g. it's non-trivial to donate to global poverty because CCP prohibits international nonprofits from fundraising in China

How can you start a career in this area?

- Teach English in China

- Work at a big Chinese company or in the Chinese office of a big Western company

- Work in philanthropy in China

- Do journalism about China-relevant issues

- Study at a Chinese university

Sebo: A Theory of Change for Animal and AI Welfare causepri

https://www.youtube.com/live/Mb7uRki3AqM&t=1h47m

- These issues are urgent. Soon we may lock ourselves into a bad trajectory

- But we know little about what would benefit wild animals or AIs, and there is little political will

- We have no idea where to go, and we need to start heading there now

- We should follow a dual-track approach: take steps that

- constitute progress for some — directly help some non-humans

- build momentum for all — help generate knowledge, capacity, and political will, so that our future actions will be more effective

- We are already taking steps to end factory farming in the next 50–100 years, even though the goal is a long way away. We know how to use a dual-track approach

- Cage-free campaigns (1) help layer hens now; and (2) help us get knowledge about how to effect change; increase advocates' capacity; and build political will for further reforms

- At NYU's Wild Animal Welfare program, we have proposed that cities incorporate low-hanging-fruit wild animal considerations into environmental planning, e.g. bird-safe glass. Bird-safe glass isn't the most important issue, but it's progress for some birds, and it brings wild animal welfare into the conversation

- With invertebrates and AI, we have a foundational issue: fundamental disagreement about whether their welfare matters

- We hope the New York Declaration on Animal Consciousness will provide a foundation to raise concern for invertebrate welfare

- Not all steps will turn out to be helpful. But if you're building momentum, then you can course-correct with future steps

- We shouldn't perpetually wait until we get more knowledge. There's so much to know that we could delay forever; and taking first steps helps us get that knowledge faster

lukeprog: comment on Thoughts on the Singularity Institute (2012) causepri rationality

- I don't have prior management experience

- The solution to almost every problem has been (1) read what experts say and (2) consult with people who have solved it before

- When I called our Advisors, most of them said "Oh, how nice to hear from you! Nobody at MIRI has ever asked me for advice before!"

- A bunch of improvements I made came from Nonprofit Kit for Dummies

Deep Research: AI x-risk legislation xrisk causepri policy

https://claude.ai/public/artifacts/ca285229-346e-44c7-872e-c12c928297de https://claude.ai/public/artifacts/8aca8aef-1fa1-4314-9f60-f9284b69d737

list of bills was collected by Claude; my notes are from the official bill summaries

US – Global Catastrophic Risk Management Act of 2022 (S. 4488)

- The only enacted law that addresses x-risks

- Incorporated into H.R.7776

- Establishes a committee to assess global catastrophic risks and existential risks

- Govt must produce a Global Catastrophic Risk Assessment every 10 years (the most recent report was temporarily withdrawn (??))

US – National AI Commission Act of 2023 (H.R. 4223)

- Introduced but no further action

- Establishes a commission to mitigate AI risks

- Commission must recommend a comprehensive, binding regulatory framework

- The bill text (it's short) does not specify the nature of AI risks

- me: This is potentially a good bill. My main concern is the commission would under-rate x-risk and its proposed regulation would end up not helping with x-risk while also preventing more x-risk-relevant regulation from being implemented

US – AI Research, Innovation, and Accountability Act of 2024 (S. 3312)

- Passed committee and then stalled

- No summary but from skimming the bill, looks like it's largely about present-day AI risks, e.g. it mandates that AI-generated text on a website must be labeled as such

- Requires AI orgs to perform risk management assessments before making their systems publicly available

US – AI Foundation Model Transparency Act of 2023 (H.R. 6881)

- Dead

- Requires companies to disclose training data and methodology for foundation models

US – Preserving American Dominance in AI Act of 2024 (S. 5616)

- Referred to commerce committee in December 2024; no action since then

- Bipartisan sponsors

- Establishes AI Safety Review Office under Department of Commerce

- Mandatory reporting on safety testing, mitigation, and cybersecurity procedures

- Within 1 year of bill passing, the Office shall develop binding standards for evals and safety review, in coordination with certain other departments (Energy, Homeland Security, NIST, NSA, etc.)

UK – forthcoming bill

- July 2024 King's Speech announced intention to impose legal requirements like mandatory safety testing and requirements to share safety test data with the UK AI Safety Institute

- No bill has yet been introduced

- me: The fact that they think they can get companies to abide by this is promising for the possibility of UK regulation

California – CalCompute: foundation models: whistleblowers (SB 53)

- Proposed but text not yet written

- Declares the intent of the legislature to enact legislation to establish safeguards for AI frontier models

- me: I think what this is trying to say is that in the future, this bill text will establish safeguards for AI frontier models, but the text has not been written yet

- Sponsored by Encode, Economic Security Action CA, and Secure AI

EU – EU AI Act (Regulation 2024/1689)

- Mandates risk assessment and mitigation

- Model evaluations

- Incident tracking and reporting to AI Office

- AI Office may evaluate general-purpose AI systems to assess compliance

US – Preserving American Dominance in Artificial Intelligence Act of 2024 (S. 5616)

- Referred to a committee in late 2024; no action yet

- Establishes AI Safety Review Office in Department of Commerce

- Review Office may conduct 90-day safety reviews of frontier models prior to release

- Review Office may deny the release of a model that poses CBRN or cyber risks

US – FLI Recommendations for the US AI Action Plan (2025)

- Regulatory recommendations, not legislation

- Moratorium on developing AI with escape, self-improvement, or self-replication capabilities

- Mandate installation of an off-switch

- some other reasonable x-risk-oriented stuff

EU – Council of Europe: Framework Convention on AI (2024)

- International treaty. Open for signatures; has been signed by EU countries, US, Canada, Israel, Japan

Zach Groff: Digital sentience funding opportunities: Support for applied work and research causepri

- Announcing Consortium for Digital Sentience to support projects on digital consciousness / moral status, including RFP

Areas where people can contribute

- Applying theories of consciousness to determine whether AI systems might be conscious

- Innovative approaches, like work on AI introspection

- Philosophy on the role of AI in society

- Educating the public

- Applied work to improve AI welfare if sentient

Three funding opportunities

- Research fellowships on digital sentience

- Career transition fellowships

- RFP: applied work on digital sentience and society

Deep Research: spending on AI lobbying causepri policy xrisk

Claude report; ChatGPT report; 2024 companies; 2023 companies

- Raw lobbying numbers should be accurate because they're pulled from OpenSecrets. But I didn't check for accuracy

- Not all lobbying spending has to be reported; these are just the public figures

- For the big companies, I asked Claude to estimate how much of lobbying expenditures were on AI specifically. It generally said 20–30% so I reduced numbers accordingly, but I have no idea how accurate that is

- I filtered out some entries that looked irrelevant, e.g. original table included National Association of Realtors which relates to AI because of "algorithmic property valuations"

- Numbers are in thousands of dollars

| Org | 2023 | 2024 | Description |
|---|---|---|---|
| | 1800 | 3000 | |
| | 2100 | 2600 | |
| Microsoft | 2200 | 2800 | |
| Amazon | 3500 | 4500 | |
| OpenAI | 1000 | 1760 | |
| Anthropic | 280 | 470 | |
| NVIDIA | 510 | 640 | |
| Scale AI | 400 | 710 | AI data services |
| IT Industry Council | 2500 | 2880 | advocacy representing tech co's |
| BSA Software Alliance | 1730 | 1920 | pro-innovation AI policies |
| Shield AI | 1280 | 1300 | military autonomous systems |
| Digital Force Technologies | 80 | 80 | defense contractor |
| Accrete AI | 0 | 50 | AI company |
| Applied AI | 50 | 0 | venture group |
| Association for the Advancement of AI | 40 | 80 | unclear policy stance |
| Center for AI Policy | 200 | 281 | AI safety (x-risk focus) |
| Control AI | 0 | 42.5 | AI safety (x-risk focus) |
| AI Policy Network | 8.5 | 8.5 | AI safety (x-risk focus?) |

| Category | 2023 | 2024 | Average |
|---|---|---|---|
| anti-safety spending | 17380 | 22710 | 20045 |
| pro-safety spending | 208.5 | 332 | 270.25 |
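The category totals can be recomputed from the per-org rows. Working backwards from the sums, "anti-safety" appears to cover every row from the two unnamed rows through Accrete AI. Applied AI and AAAI appear to be left out of both categories, and "pro-safety" appears to be Center for AI Policy, Control AI, and AI Policy Network. This grouping is an inference from the arithmetic, not an explicit rule. The sketch below just checks that it reproduces the summary table (all figures in thousands of dollars).

```python
# Per-org lobbying spend in thousands of dollars: (2023, 2024).
# Grouping INFERRED from the summary totals; Applied AI and AAAI appear to be
# excluded from both categories. The first two rows have no org name.
anti_safety = {
    "unnamed row 1": (1800, 3000),
    "unnamed row 2": (2100, 2600),
    "Microsoft": (2200, 2800),
    "Amazon": (3500, 4500),
    "OpenAI": (1000, 1760),
    "Anthropic": (280, 470),
    "NVIDIA": (510, 640),
    "Scale AI": (400, 710),
    "IT Industry Council": (2500, 2880),
    "BSA Software Alliance": (1730, 1920),
    "Shield AI": (1280, 1300),
    "Digital Force Technologies": (80, 80),
    "Accrete AI": (0, 50),
}
pro_safety = {
    "Center for AI Policy": (200, 281),
    "Control AI": (0, 42.5),
    "AI Policy Network": (8.5, 8.5),
}


def totals(group):
    """Return (2023 total, 2024 total, two-year average) for a group."""
    y2023 = sum(a for a, _ in group.values())
    y2024 = sum(b for _, b in group.values())
    return y2023, y2024, (y2023 + y2024) / 2


print("anti-safety:", totals(anti_safety))
print("pro-safety: ", totals(pro_safety))
```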

Lobbying and Policy Change: Who Wins, Who Loses, and Why causepri policy

(it's a book so I'm mega-summarizing)

Table 10.3: Correlation between advocate resources and outcomes

| Resource | Correlation with initial win |
|---|---|
| PAC spending | –.01 |
| Lobby spending | –.01 |
| Covered officials | .04 |
| Association assets | –.02 |
| Members | –.04 |
| Business assets | .06** |

**p < .05

Table 10.5: Directly comparing lobbyists on two sides of an issue, in what percent of cases did the more resourceful side win for each type of resource?

| Resource | % of cases won by the more resourceful side |
|---|---|
| high-level government allies | 78*** |
| covered officials lobbying | 63*** |
| mid-level government allies | 60*** |
| business financial resources | 53 |
| lobbying expenditures | 52 |
| association financial resources | 50 |
| membership | 50 |
| campaign contributions | 50 |

***p < .01

- "covered officials" = number of lobbyists who previously worked as Congress members or staffers (pg 200)

- Lobbying is competitive, which drives correlations to zero

- Perhaps there would be a more obvious correlation between wealth and outcomes if the wealthy allied only with the wealthy, but cross-wealth coalitions are common

- Allied businesses' revenue, sales, and lobbying expenditures are correlated at 0.17*** to 0.26***. ~95% of variability in coalitions is not explained by resources

- Some issues are ongoing and long-term, so short-term spending matters little

- me: No causal evidence is presented, just associations

- I had ChatGPT read the whole book for relevant quotes
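The ~95% figure is consistent with squaring the reported correlations. A linear correlation r accounts for r² of the variance, leaving 1 - r² unexplained. Below is a quick check, assuming that is how the figure was derived:

```python
def unexplained_share(r: float) -> float:
    """Fraction of variance not explained by a linear correlation r."""
    return 1 - r ** 2


# Endpoints of the reported range of correlations among allied businesses.
for r in (0.17, 0.26):
    print(f"r = {r:.2f}: explains {r**2:.1%}, leaves {unexplained_share(r):.1%} unexplained")
```

The unexplained share runs from about 93% (r = 0.26) to about 97% (r = 0.17), which brackets the ~95% in the notes.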

Leticia Garcia: What We Learned from Briefing 70+ Lawmakers on the Threat from AI causepri policy xrisk

- As a policy advisor with ControlAI, I briefed 70+ UK parliamentarians

Reception of our briefings

- Few parliamentarians are up to date on AI and AI risk

- Parliamentarians have 2–5 staffers. They don't have time to research AI

- They appreciated the chance to ask us silly questions

- They noted they are often lobbied by tech companies focused on AI's benefits and found it refreshing to hear the other side

- Politicians are good at faking agreement, but they also showed tangible signals: 1 in 3 lawmakers we spoke with supported our campaign acknowledging x-risk and calling for binding regulation

- People said such a strongly worded statement would not gain support from lawmakers, but that has proven false

Outreach tips

- Cold outreach worked well

- I followed up repeatedly if no response. Parliamentarians receive a lot of messages and easily miss/forget things

- At the end of each meeting, I ask whether there is another colleague who might be interested

Key talking points

- Saying "AI poses an extinction risk" places more of a burden of proof on me than saying "In 2023, Nobel Prize winners, AI scientists, and CEOs of leading AI companies stated that 'mitigating the risk of extinction from AI should be a global priority, alongside other societal-scale risks such as pandemics and nuclear war'" and showing a list of signatories

- not just CEOs, but top AI scientists

- Loss of control scenarios have been acknowledged by authoritative sources: 2025 International AI Safety Report, Singapore consensus on AI safety priorities, UK Secretary of State for Science, Innovation and Technology

- YouGov opinion research in the UK

- Parliamentarians want to prioritize issues that their constituents care about

- I bring some recent media articles to meetings

- I compare AI to other high-risk sectors. Aircraft manufacturers have to meet strict safety standards; UK Civil Aviation Authority must certify the plane before it can carry passengers

- Empirical evidence on loss of control: Frontier Models Are Capable of In-Context Scheming or 'Scheming' ChatGPT tried to stop itself from being shut down

Crafting a good pitch

- Make your pitch understandable to someone who's new to AI

- Parliamentarians need to be able to explain your pitch to others. Make it memorable + concise

- "AI is grown, not built"

- Dario: "Maybe we now like understand 3% of how they [AI systems] work"

- Keep 80% of the pitch consistent and innovate on the other 20%

Some challenges

- If they say AI takeover won't happen, find out why they believe that

- One parliamentarian told us that the company board would prevent risks

- "Do you think boards always function perfectly to prevent harm?"

General tips

- Everyone wants to talk their book. Listen to the parliamentarian. "To be interesting, be interested"

- Parliamentarians are people too!

Books

- How Westminster Works and Why it Doesn't, by Ian Dunt

- How to Win Friends and Influence People

- How Parliament Works (9th Edition) by Besly & Goldsmith

Deep Research: How does public opinion influence policy? policy causepri

https://chatgpt.com/share/683a75eb-8ad8-8011-867b-fcfc03604a4a

- me: I'm summarizing the Deep Research report (+ some study abstracts). I trust Deep Research to accurately summarize studies' text, but I don't trust the studies to have good methodologies so I don't have high confidence in these claims

- I did all the citations manually so I know the studies are not hallucinated

Public opinion and policy responsiveness

- Policy is responsive to public opinion on salient issues, but less so on low-salience issues [1]

- Raising an issue's salience (e.g. via media coverage) doubles the impact of public support on policy adoption [1]

- The study measured salience as the number of times the issue was mentioned in the New York Times (on a log scale). see full text

- me: if true, that sounds relevant to AI, where people are broadly concerned about AI but it's not high priority for most people, which suggests media outreach/protests are useful for increasing salience

- "Democratic deficit": policy reflected majority public opinion only ~half the time [1]

- When controlling for elite preferences, the preferences of average Americans have minuscule effect on policy [2]

- me: This sounds like what I'd expect biased left-leaning social scientists to find, and I'm suspicious of controlling for variables, so I don't trust this result

- Policy-makers are more responsive to economic elites and organized interest groups than to general opinion [2]

- Policy shifts tend to occur in the same direction as public opinion shifts, but with a lag [3]

- Politicians pay most attention to their own supporters' opinions [3]

Interest groups, lobbying, and advocacy

- note: Deep Research has not read the book [4] but I asked it to find other sources summarizing/reviewing the book (1, 2) and those sources confirmed the claims below

- A study of 98 lobbying efforts found that 60% of campaigns failed due to a "tremendous bias in favor of the status quo" [4]

- Lobbying expenditures explained less than 5% of the variation in success of lobbying campaigns [4]

- Bigger factors were: balance of advocacy coalitions; institutional hurdles; prevailing public or elite support

- Lobbying activity is mainly funded by business groups, whereas unions or public-interest groups play a smaller role [4]

- Are governments more responsive to the public, or to advocacy (lobbying, social movements, etc.)? Neither: most often, neither influences policy [5]

Social movements and protest

- me: Deep Research's evidence was overlapping with, or inferior to, the evidence from Do Protests Work? A Critical Review. The below notes are a summary of my prior findings, not from Deep Research

- Good evidence that peaceful protests work

- Decent evidence that violent protests backfire

- Observational evidence finds mixed effects of "non-normative" protests. May be positive or negative

- "non-normative" = sit-ins, blocking traffic, etc. Pushing boundaries but not violent

Media, agenda setting, and elite signaling

- Media attention on an issue gives it more political attention [6]

- Congress members who are less covered by their local press work less for their constituencies [7]

- Policy-makers take cues from party leaders (following the "party line")

Bureaucratic responsiveness and regulatory change

- Bureaucratic agencies do pay attention to public comments. "The formal participation of interest groups during rulemaking can, and often does, alter the content of policy" [8]

- Comments mostly come from businesses. As a result, post-comment rule changes tend to shift toward business interests [9]

- Business influence was strongest when businesses were unified and public interest groups were not [9]

- me: this is bad news for AI safety

- Public comments made a difference, especially comments from professional organizations and/or when media attention was also present [10]

- Mass form letters had less sway than individual comments from experts [10]

- State regulations are more susceptible to interest group capture than federal, due to less media scrutiny

References

- [1] Lax, J. R., & Phillips, J. H. (2011). The Democratic Deficit in the States. full text: Democratic Deficit.pdf

- [2] Gilens, M., & Page, B. I. (2014). Testing Theories of American Politics: Elites, Interest Groups, and Average Citizens.

- [3] Barberá, P., Casas, A., Nagler, J., Egan, P. J., Bonneau, R., Jost, J. T., & Tucker, J. A. (2019). Who Leads? Who Follows? Measuring Issue Attention and Agenda Setting by Legislators and the Mass Public Using Social Media Data.

- [4] Baumgartner, F., Berry, J., Hojnacki, M., Kimball, D., & Leech, B. (2009). Lobbying and Policy Change: Who Wins, Who Loses, and Why. ISBN: 9780226039466, 0226039463

- [5] Burstein, P. (2024). The Impact of Public Opinion and Advocacy on Public Policy.

- [6] Walgrave, S., & Van Aelst, P. (2016). Political Agenda Setting and the Mass Media.

- [7] Snyder, J., & Strömberg, D. (2010). Press Coverage and Political Accountability.

- [8] Yackee, S. W. (2005). Sweet-Talking the Fourth Branch: The Influence of Interest Group Comments on Federal Agency Rulemaking.

- [9] Yackee, J. W., & Yackee, S. W. (2006). A Bias Towards Business? Assessing Interest Group Influence on the U.S. Bureaucracy.

- [10] Ingrams, A. (2023). Do public comments make a difference in open rulemaking? Insights from information management using machine learning and QCA analysis.

AlphaArchitect roundup (April 2025) finance momentum trend

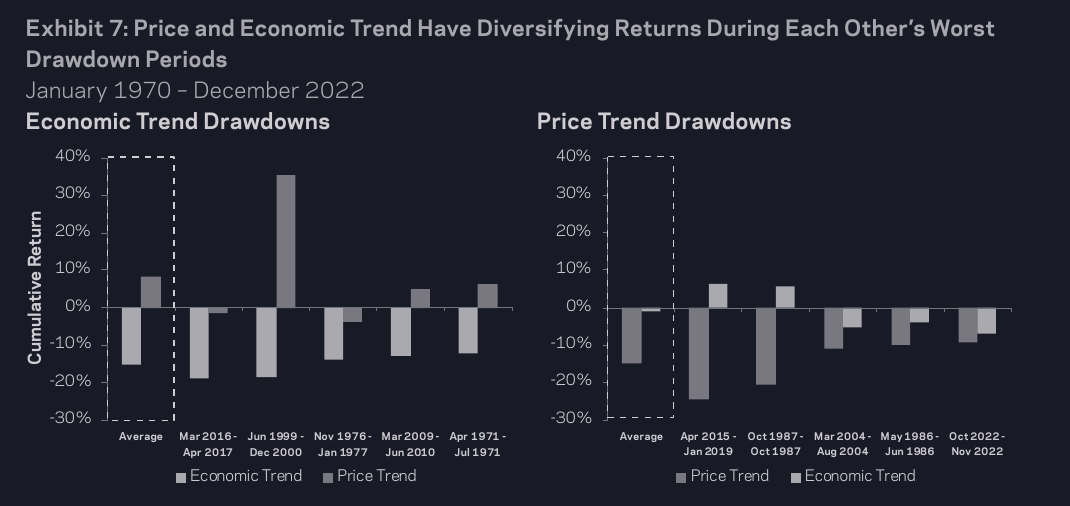

Macro momentum

- Wes: Macro momentum is legit, AQR did pioneering research on it

- I prefer price trend for crisis alpha because signals are up-to-date

- Macro momentum is fine, but it has low data frequency and is prone to data mining. Probably a good diversifier, but I don't want to dilute my crisis alpha

How would we know if trend stopped working?

- Wes: You can do Bayesian updating based on recent data, but you'd need a ton of out-of-sample underperformance (50+ years) to change your mind

- Or look at the reason why you think it works in the first place

- If people stopped experiencing fear and greed, I might get worried about trendfollowing. But I'm not worried about people's psychology changing

- Ryan: Is AUM a factor?

- Wes: If trend AUM got too big then trend should stop working, but I don't think it has that much volume

- VOO alone gets $2B daily volume. Trend is way less than that

Andy Masley: How I use AI (April 2025) productivity

https://andymasley.substack.com/p/how-i-use-ai?open=false#%C2%A7models-i-use

- Tell the LLM the language you want in your profile's customization section

- ChatGPT has the best Deep Research. Gemini is bad

- Use LLMs to build a basic narrative of a new field you don't know much about

- "If you were to write a textbook introducing [subject] to someone with [my exact background], what would each chapter be titled? Include a description of the contents"

- Then ask it to expand on specific chapters you'd like to read

- Aggressively ask follow-up questions

- Asking questions to humans can feel stupid so you should ask LLMs way more questions than you're used to

- If you don't understand a chunk of text, paste it into an LLM and ask it to explain

- LLM reasoning models (like o1/o3) are great at BOTECs

- For ugh fields, ask the LLM to break the task into a list of the smallest possible steps

- LLM can fact-check documents

- me: I asked it to fact-check the article I posted earlier today. It identified two issues, one of which was legitimate and the other of which it was wrong about and my article was right

- If you don't understand why people disagree with you, stage a debate where the LLM takes the opposing side

MIRI: AI Governance to Avoid Extinction causepri xrisk

https://www.lesswrong.com/posts/WkCfvqyjCzvRrwkaQ/ai-governance-to-avoid-extinction-the-strategic-landscape https://intelligence.org/wp-content/uploads/2025/05/AI-Governance-to-Avoid-Extinction.pdf

executive summary only

- The field is on track to produce ASI while having little or no understanding of how to steer its behavior

- Four high-level scenarios for how the future goes:

- Off Switch and Halt – coordinated global development of an off switch

- US National Project leading to US global dominance

- Light Touch – continued private-sector development with limited government involvement

- Threat of Sabotage – nations keep AI capabilities limited to safe levels through mutual threats of interference

- We explore the viability of the governance strategy behind each scenario

Off switch and halt

- Global coordination with the ability to monitor and restrict dangerous AI

- Eventually may lead to a Halt

- Off Switch = global ability to Halt on demand

- There is disagreement on whether a Halt is necessary, but it should be uncontroversial that humanity should have the ability to stop AI development if it decides to

US National Project

- US government races to develop advanced AI and establish unilateral control over global AI development

- This would entail unacceptably dangerous challenges: avoiding misalignment, avoiding governance failure such as authoritarian power grabs, avoiding war, etc.

Light-Touch

- Similar to the current world

- We have not seen a credible story for how this situation is stable in the long run

- Governments will become more involved as AI becomes strategically important

Threat of Sabotage

- Mutually assured AI malfunction

Orpheus16 & hath: Speaking to Congressional staffers about AI risk (2023) xrisk causepri

https://www.lesswrong.com/posts/2sLwt2cSAag74nsdN/speaking-to-congressional-staffers-about-ai-risk

- In May-June 2023, I (Orpheus16) had 50–70 meetings about AI risk with congressional staffers

- Some things I did to get better at talking to policy-makers:

- Talk to people with experience interacting with policy-makers. Ask them what mistakes they made

- Read books: Master of the Senate and Act of Congress

- Practice with policy-makers you already know and get feedback

- A common sentiment among Congressional staffers was "AI is important but I haven't had time to learn about it"; they were grateful to meet with someone who could answer their questions about AI

- Many people seemed concerned about x-risk, but it's rare for people to be like "X is an existential risk" -> "Therefore I should seriously consider working on X"

- My experience made me think that the Overton window on AI is extremely wide

- But it's also very hard to get Congress to do anything

- Many people are overestimating the amount of "inside game" in DC

- Simply caring about x-risk is less important now. What matters is what specific policies people are willing to advocate for

- More people should be public about their beliefs because this makes it easy to coordinate around shared beliefs

- Culture in AI policy strongly disincentivized me and my peers from being direct. You can lose influence by being too direct but I think the existing culture too harshly stymies new policy efforts

comment by trevor

- 20% of Congress members have >80% of the power; talking to the other 80% isn't very helpful

- Staffers exist to talk to you and make you feel heard. Talking to them alone isn't much of an accomplishment

- Policy-makers have a lot on their minds. They will probably forget about the thing you think should be a priority. It's helpful to re-expose them many times

Marius Hobbhahn: What's the short timeline plan? (2025) causepri xrisk

https://www.lesswrong.com/posts/bb5Tnjdrptu89rcyY/what-s-the-short-timeline-plan

- Plausible that AI will be able to replace top AI researchers in 2027

- AGI companies should be more prepared than they are, and we're getting into the territory where models are capable enough that acting without a clear plan is irresponsible

- My plan is not anywhere near sufficient

- Funders should offer a "best short-timeline plan prize"

Short timelines are plausible

- A plausible timeline:

- 2026: AI can do some sorts of novel research

- 2027: AI can replace AI researchers

- 2028: Rapid acceleration of R&D

- 2029: Robotics is solved; >95% of jobs can be automated

- 2030: Superhuman AIs are integrated into every part of society

- Reasons to believe in a short timeline:

- AI progress in the last decade was much faster than most people expected

- AI companies have predicted short timelines

- No further major blockers to AGI

- me: this premise wasn't really justified

- Longer timelines are understandable but we should assign >10% chance to short timelines. Therefore, there should be a plan

What do we need to achieve at a minimum?

- Requirements:

- Model weights are secure

- SL4 or higher as defined here

- If model weights aren't secure, most alignment efforts matter less because you can't guarantee that they are used

- AI that can speed up alignment research isn't successfully scheming

- me: also need that alignment research can be solved by AI. e.g. maybe it requires figuring out how to specify values, which isn't something researcher AIs can do

- Model weights are secure

- I imagine there is one or a small number of AGI companies in the US

- AI company security is supported by the US government

- Frontier model is not publicly available, and is mainly used for safety research

- I will make the conservative assumptions that nobody will stop racing and we can only do the bare minimum

- me: I think this assumption implies that developers won't use their researcher AIs to do alignment research, they'll do more profitable research instead

Making conservative assumptions for safety progress

- No major breakthroughs in alignment before AGI

- By AGI, we roughly have ++ versions of the alignment tools we currently have

- There are no major changes in AI governance (no international treaties or major legislation)

- No AI paradigm changes (AI still means transformers trained with reinforcement learning)

So what's the plan?

- I haven't thought about it deeply

- This is a "throw the kitchen sink at the problem" plan

- A better plan would do some things differently:

- Explicit assumptions about probabilities of various risks

- Do defense-in-depth more intelligently by choosing uncorrelated safety measures instead of random stuff

- My plan is inconsistent in a bunch of ways, e.g. if an AI company is unwilling to pause for >3 months then it might also not be willing to accept higher costs for transparent chain-of-thought

- Plan has two layers

- First layer seems absolutely required

- Second layer greatly reduces risk, but lacking it doesn't guarantee a catastrophic outcome

- There are more layers that should exist but this plan focuses on the top priorities
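Why defense-in-depth should favor uncorrelated safety measures can be shown with a toy failure model. A minimal sketch, assuming (hypothetically) k layers that each fail with marginal probability m, where with probability c a single common-mode failure defeats every layer at once and otherwise layers fail independently. The parameter values are made up for illustration, not estimates from the post.

```python
def p_all_layers_fail(marginal, k, common_mode):
    """Probability that every one of k safety layers fails.

    marginal: each layer's overall failure probability (hypothetical)
    common_mode: probability of a shared failure that defeats all layers at once
    The independent per-layer failure rate q is chosen so that each layer's
    marginal failure probability stays equal to `marginal`.
    """
    if not 0 <= common_mode <= marginal <= 1:
        raise ValueError("need 0 <= common_mode <= marginal <= 1")
    if common_mode == 1:
        return 1.0
    q = (marginal - common_mode) / (1 - common_mode)
    return common_mode + (1 - common_mode) * q**k


if __name__ == "__main__":
    for c in (0.0, 0.01, 0.05):
        print(c, p_all_layers_fail(marginal=0.1, k=3, common_mode=c))
```

With three layers that are each 90% reliable, full independence gives a 0.1% chance that all of them fail, but a 5% common-mode chance pushes that to about 5%: the shared failure mode, not the number of layers, dominates.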

Layer 1

Keep a paradigm of faithful chain-of-thought

- We got lucky that current AI systems do visible and understandable reasoning

- me: I don't think you can confidently say that current CoT is faithful. Anthropic (2025), Reasoning models don't always say what they think (Zvi summary), found that only 20–30% of CoTs were faithful

- Minimum requirements:

- Keep future systems able to do CoT and be willing to take capability hits (higher cost to run systems)

- Do more research on faithful CoT

- Stop working on removing CoT, e.g. Meta's COCONUT

Significantly better (CoT, action, and white-box) monitoring

- If we have human-legible CoT, my main line of defense would be really detailed monitoring

- Run multiple simple monitors looking for various failures:

- scheming (hiding goals, attempting to reduce oversight)

- power-seeking

- goal-directed behavior across multiple context windows

- AI companies probably do something like this already but they should do more

- me: This only works if the AI isn't smart enough to evade monitoring; if you get monitoring to work on AI v1, unclear if it will still work on AI v2

- Monitoring would also help us understand how well our alignment techniques are working

Control (that doesn't assume faithful chain-of-thought)

- Develop ways to make models useful even when they're scheming

- and even when CoT is not dependable

- Something like what Redwood is doing, but 100x more and applied to frontier AI systems

Much deeper understanding of scheming

- Detection tools

- Understand how scheming develops

- How often scheming shows up

- Hidden scheming – punishing obvious scheming may lead to harder-to-detect scheming

- Prepare what we would do if we detected different kinds of scheming

- More capability evals

Evals

- Need evals to tell us if monitoring and control plans are working

- Need to understand capabilities to know upper bounds of what models can do

Security

- Keep model weights from being stolen and keep agents from escaping

- SL5 security likely impossible to implement within 2–3 years. Need to triage

Layer 2

- These things are quite important, but not as important as Layer 1

Improved near-term alignment strategies

- RLHF and RLAIF work better than I would have predicted in 2020

- Existing alignment techniques can already introduce non-myopic and non-corrigible behavior. We need more effort to keep models myopic and corrigible

Continued work on empirical alignment research – interpretability, scalable oversight, superalignment, etc.

- Low confidence that these will work, but could be part of defense-in-depth

Reasoning transparency from AI companies

- Communicate plan internally. Be fully transparent about risk tradeoffs

- Communicate plan with external experts

- Communicate plan with general public

- Less directly useful, but morally important b/c plan affects the public

Safety-first culture

- Examples:

- Safety is deeply integrated into the entire development process. AI companies treat safety as a separate phase from capabilities but this will become increasingly dangerous since sufficiently strong models can cause harm during development

- At a minimum, companies should use continuous safety testing during development

- New capabilities are first used for safety, e.g. for cybersecurity hardening. Norms should be established now, not when we reach these capabilities

- Treat safety as an asset

- me: idk what this means


- This is unlikely to happen

- Need to triage. I think the most important part of culture is that leadership agrees on what evidence they would need to pause, and actively seeks that evidence

- Plan as if company does not have safety culture, e.g. do not give significant power to any one employee

Known limitations and open questions

- My plan should really be seen as an encouragement to come up with a better plan

- Better plan would make more explicit assumptions about where risk comes from, probabilities of different risks, and how much risk the AI developer is willing to take

- Choose Layer 1 efforts using a more explicit defense-in-depth approach, where we have strong evidence that the measures cover each other's weaknesses

- More detail. Every section could be its own 50-page report

joshc: Planning for Extreme AI Risks (2025) causepri xrisk

https://www.lesswrong.com/posts/8vgi3fBWPFDLBBcAx/planning-for-extreme-ai-risks

- This is a plan for a responsible AI developer ("Magma") with the sole objective of minimizing takeover risks

Summary

- This plan is for what to do before (1) human researcher obsolescence, (2) a coordinated pause, or (3) Magma self-destruction

- Before meaningful AI R&D acceleration starts, Magma should:

- Aggressively increase capabilities

- Spend most safety resources on preparation

- Devote most preparation to: (a) raising awareness of risks, (b) preparing to get AIs to do safety research, (c) preparing extreme security

- Then orient toward one of the previously-mentioned outcomes

Assumptions

- Goal is to minimize extreme risks

- AI R&D automation starts in 2028 or earlier

- Magma is uncertain about the difficulty of safety, reducing the appeal of "shut it all down" moonshots [link to Eliezer TIME article] that are most promising in scenarios where alignment is very difficult

- me: Shouldn't uncertainty increase the appeal of pausing? I think the claim being made is that Magma's alignment plan is much more likely to actually happen than a pause is. But I don't think that makes sense because if Magma's alignment plan is likely to succeed, then so is the counterfactual leading AI company's plan

- me: also no company is following Magma's plan. As an outsider, "get companies to follow Magma-esque plan" and "advocate for pause/regulations" seem comparable difficulty (actually latter seems easier IMO)

- me: but it's hard to directly compare the "be first and build safe AI" plan vs. the "pause" plan because these plans would not be executed by the same entity (unless, like, an AI company spends all its assets on lobbying and then dissolves)


Outcomes

1. Human researcher obsolescence

- Safe SAI could write safety recipes for other AI developers

2. Coordinated pause

- Pause AI development after human-level AI, but before dangerous SAI

- me: Why pause at that particular time rather than some other time? (say, right now) Pausing later means danger is more clear, but also companies are giving up a juicier profit reward

- Pause could happen at any time (not necessarily after human-level AI)

- me: Unclear to me what the author has in mind. does "any time" include "now"?

3. Self-destruction

- Magma unilaterally slows down and loses its frontier status

- Provides a costly signal of AI danger

- me: As with pause, it seems to me that the best time to do this was 3 years ago, 2nd best time is now. My current thought is the best plan for an AI company is to first unilaterally pause; then negotiate with other AI companies to try to get them to pause, and/or push for international treaty

Goals

Goal 2: reduce risks from other AI developers

- Share benefits of own AI with other companies to disincentivize them from building their own

- Share safety research

- Provide resources to external safety researchers

- Model good decision-making

- Publish demonstrations of risks

- Advocate for regulations

- me: AI companies could be doing this way harder than they have (in fact they've advocated against regulations but let's leave that aside). e.g. they could draft legislation with strict controls on dangerous capabilities, or fund lobbying groups. I think this has a good chance of slowing down AI the same way a coordinated pause would. There's a lot of public support for stricter regulations, AFAICT the main force lobbying against it is AI companies (eg SB-1047 was very popular but AI companies lobbied against it, even Anthropic pushed for a weakened version).

- Coordinate with other developers

Gain access to more powerful AI systems to help advance the other goals

1. Advance AI R&D

2. Maintain positive PR

3. Deploy models to earn revenue

4. Merge with a leading developer, or obtain API access to their internal systems

- me: I don't see what connection these listed strategies have to the other goals (except for #3 because more revenue = you can spend more on safety research)

Heuristic #1: Scale aggressively until AI can meaningfully accelerate R&D

- This sounds reckless, but it is a reasonable choice

- Scaling means Magma gets:

1. more capable AI labor

2. more compelling demos of risks

3. more relevant safety research

4. more investment and compute

5. more ability to influence government/industry

- me: Scaling gets you #2 and #3 sooner but I don't think it gets you more of them. With slow scaling, you still get them, and in fact the window between getting them and having x-risk-level AI is wider, which is preferable

- me: #1 seems wrong to me, if AI isn't yet good enough to much accelerate AI R&D then it's probably not good enough to do useful safety research either

- Cost of scaling is low because if AI can't accelerate R&D then it probably doesn't pose extreme risks

- Low political will to coordinate, so slowing down isn't likely to cause other actors to slow down

- me: A pet peeve of mine is arguments that strongly depend on testable empirical claims and then don't test them. Magma should try to coordinate a pause right now and see how it goes (and they should actually try, not like "have one conversation with the other CEO and then give up", but like "hire a team of the world's top negotiation experts at $10M salaries to work on it full time")

- me: If 2 of 3 desired outcomes are coordinated pause + self-destruction, why believe those will work in 2027–2030 (or whenever) but not in 2025? Scaling aggressively only makes sense if (a) you expect the near-AGI climate to be significantly better for coordination or (b) you're banking on solving alignment quickly and without a coordinated pause

- As capabilities improve, the risks of aggressive scaling increase and the benefits decrease

- Benefits decrease because:

- Safety mitigations become a bottleneck to extracting reliable safety work from increasingly capable AI systems

- me: idk what this means

- Increases in capability do not meaningfully affect inelastic resources like compute and public influence

- me: I kinda know what this means but idk how it's relevant

- More capable AI agents do not make demos of risks significantly more compelling


- me: There is a temptation to always scale a little bit more, to take on just a little bit more risk. Pausing/self-destructing will always be painful and it will never feel like the right time to do it. At a bare minimum, for your own sake, you should have a pre-written plan specifying under exactly what conditions you will self-destruct, and find some way to tie yourself to the mast

Heuristic #2: Before achieving significant AI R&D acceleration, spend most safety resources on preparation

- Risks from early AI systems are a low % of total risk

- Therefore, preparing to mitigate future risks is more important than mitigating existing risks

Heuristic #3: During preparation, devote most safety resources to (1) raising awareness of risks, (2) getting ready to elicit safety research from AI, and (3) preparing extreme security

- me: This part of the proposal feels meaningful b/c it's very different from what present-day AI companies are doing

- These three interventions can all be done well before developing dangerous AI

- "getting ready to elicit safety research from AI" = improving control; improving alignment evaluation methodologies

- me: I think I ~100% agree with the contents of this section. AI companies should be doing these things

Category #1: Nonproliferation

- me: unclear what this is a category of

- Can improve security long before AGI

- Improve security now

- R&D on how to further improve security – on-chip security, supply chain security, testing how to rapidly retrofit extreme security measures

Category #3: Governance and communication

- Publish safety standards, publish research on AI risk, advocate for safety mitigations

- Coordinate with other developers to agree on triggers for slowdown

- Lowest-hanging fruit is to publicly communicate the extreme risks presented by AI and what needs to be done to address them. No AI company seems to be explaining the risks

Category #4: AI defense

- This benefits less from prep than the other categories do

- Defending against SAI will likely require similarly-strong AI systems

Conclusion

- Even following a plan like this one, the situation is horrifying. Developers need to thread the needle of AI alignment in a short period

- AI companies do not have concrete plans for addressing extreme AI risks

- I encourage readers to either:

- Think about whether what you are doing is high leverage according to this plan, and change your plans accordingly; or

- Write a better plan

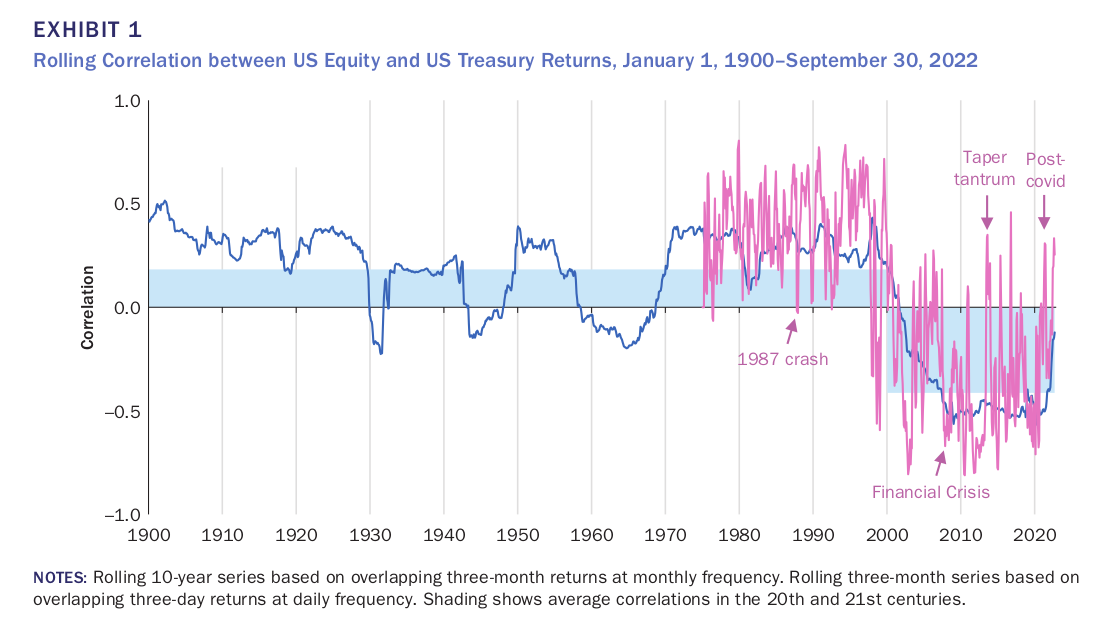

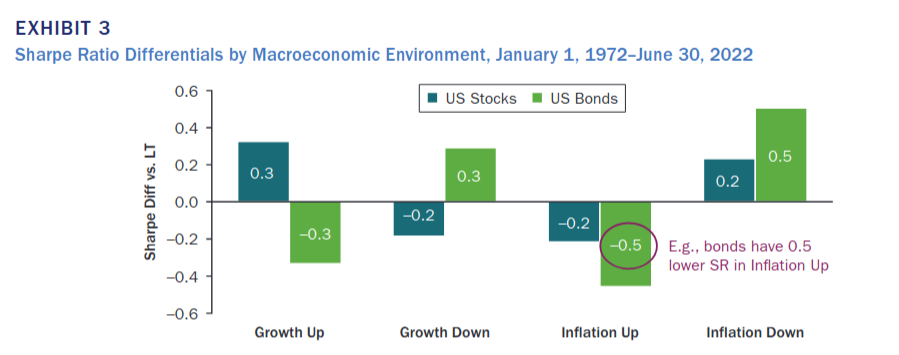

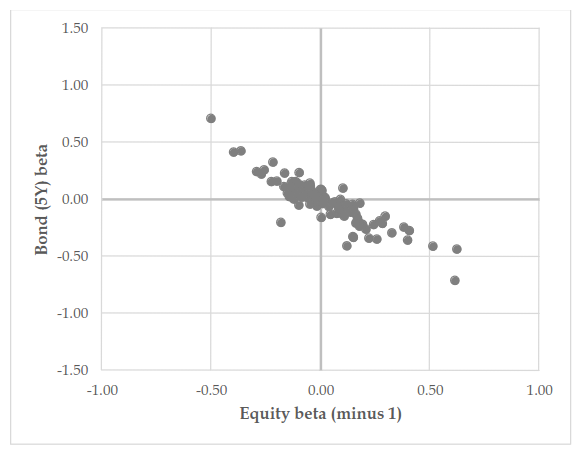

Rational Reminder discussion on Episode 350: Scott Cederburg – A Critical Assessment of Lifecycle Investing Advice finance

- TLDR of the podcast is that Cederburg did a lifecycle investing simulation that's more sophisticated than past efforts, and found that the optimal stock/bond allocation was 100/0 at all points in the lifecycle, or 155/0 if leverage is allowed at RF + 1.4%. Bonds only get included (and only barely) if leverage costs RF + 0.37%, which is the historical futures rate

- me: I don't know exactly how they accounted for future income but they did a good job handling things that have historically been neglected. They have data back to 1890; for domestic equities they pull from the domestic returns of every available country; they account for inflation (which apparently some previous attempts did not do)

- me: My biggest question is, if bonds aren't good, why is the bond market so large?

- 16: In theory, the market portfolio doesn't have to be optimal for the average investor, just the average dollar. Research by He, Kelly, and Manela suggests that the biggest drivers of asset prices are the Fed's primary counterparties

- me: I believe this is saying that most investors (dollar-weighted) are banks etc. that rationally want to hold a ton of bonds, whereas regular working folk prefer equities. And maybe the reason equities are better in the first place is that banks push up bond prices

- me: Plausibly corporations also prefer bonds if they're holding cash long term

- 19: Simulation restricted bonds to domestic only. An article tried a similar simulation including international bonds and got optimal allocations closer to 60/40

- me: But the article's simulation started in 1970, and recent decades were unusually good for bonds. Also, the article optimized for max safe withdrawal rate, whereas Cederburg used a utility function

- 22: Previous podcast (#349 @ 58 min) said international bonds introduce either currency risk or currency-hedging costs, which destroys the benefit of holding international bonds

- 88: Paper does not sufficiently address how high correlations between domestic and international equities make equities look worse. Paper found that 100/0 was still optimal when correlations were in the top quintile, but recent correlations are high even by top-quintile standards, and high correlations may persist

- Historical average was only 0.33 vs. >0.8 today

- For most of history, it was hard to invest internationally which likely kept correlations low

- Correlations are lower during wartime, but that's also when international holdings are least likely to be respected (or tradable)

- Asset allocation hardly varies with risk aversion from RRA = 0.5 to RRA = 10, which is suspicious

- 91: Risk aversion shows little effect because of the leverage constraint. When removing constraint, low RRA uses leverage
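Comment 91's point, that a leverage cap hides differences in risk aversion, shows up even in the textbook one-period Merton rule, w* = (μ − r)/(γσ²). This is a much simpler model than Cederburg's lifecycle simulation and is used here only as an assumed stand-in; the 5% equity premium and 16% volatility are hypothetical inputs. When borrowing costs RF plus a spread, levered dollars earn the premium net of the spread, and when neither regime's rule is satisfied the optimum sits exactly at 100% stocks.

```python
def merton_weight(excess_return, vol, rra, borrow_spread=0.0, max_weight=float("inf")):
    """Optimal one-period stock weight for CRRA utility (Merton rule).

    excess_return: expected stock return minus the risk-free rate (hypothetical)
    vol: annual stock volatility
    rra: relative risk aversion (gamma)
    borrow_spread: extra cost above RF for borrowing to lever up
    max_weight: leverage cap (1.0 = no leverage allowed)
    """
    var = vol**2
    unlevered = excess_return / (rra * var)
    if unlevered <= 1.0:
        w = max(unlevered, 0.0)  # no borrowing needed; shorting ruled out
    else:
        # Borrowing costs RF + spread, so levered dollars earn a smaller premium.
        levered = (excess_return - borrow_spread) / (rra * var)
        # If the net premium doesn't justify borrowing, stay at the kink (100% stocks).
        w = max(levered, 1.0)
    return min(w, max_weight)


if __name__ == "__main__":
    for rra in (0.5, 1, 1.5, 2, 5, 10):
        capped = merton_weight(0.05, 0.16, rra, max_weight=1.0)
        levered = merton_weight(0.05, 0.16, rra, borrow_spread=0.014)
        print(f"RRA {rra:>4}: no leverage {capped:.2f}, leverage at RF+1.4% {levered:.2f}")
```

In this toy, RRA 0.5 and RRA 1 both land at exactly 100% stocks once leverage is banned, while allowing leverage separates them. Allocations here still fall at higher RRA; the flat allocations up to RRA = 10 in the paper presumably reflect features of the full lifecycle model that this sketch leaves out.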

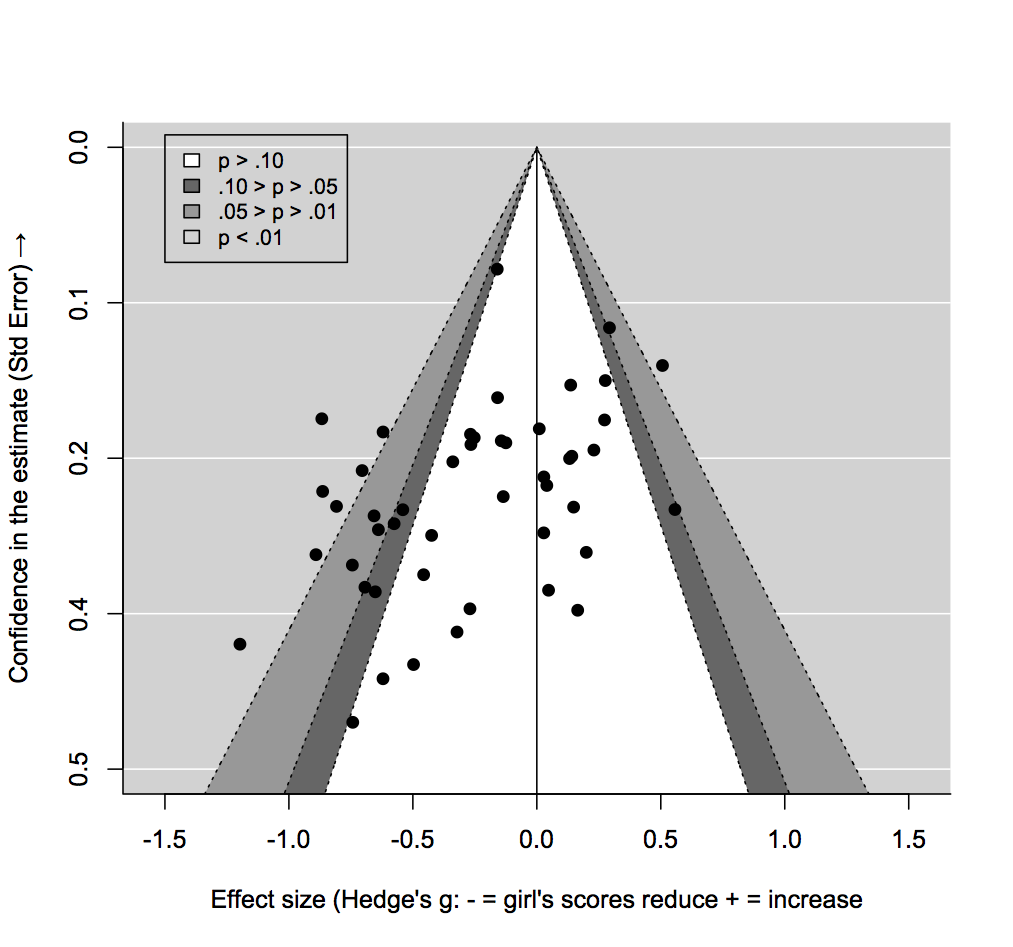

Social Movement Strategy (Nonviolent Versus Violent) and the Garnering of Third-Party Support: A Meta-Analysis (2021) causepri protests

Summary

- Nonviolent advocacy had a positive effect (d = 0.25, p < .00001, 16 studies, 4600 total participants)

- Violence had a slight negative effect (d = –0.04, p = .65)

- Published studies had bigger effect sizes than unpublished studies (average d = 0.39 vs. d = 0.22)

- State vs. social issues and domestic vs. foreign did not significantly affect results, but there was a difference between real vs. hypothetical scenarios

- me: I don't think Cohen's d is informative, surely it depends a lot on the size of the movement etc. I'm more interested in (a) whether there's an effect at all and (b) the cost-effectiveness (which depends on the absolute magnitude of the effect, not the vol-adjusted magnitude)
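The point about Cohen's d follows from its definition: the difference in group means divided by the pooled standard deviation. A minimal sketch with made-up support ratings (not data from the meta-analysis): both pairs of groups differ by exactly one point on average, but the noisier pair gets a d one third as large.

```python
from statistics import mean, stdev


def cohens_d(treatment, control):
    """Standardized mean difference using the pooled sample standard deviation."""
    n1, n2 = len(treatment), len(control)
    pooled_var = ((n1 - 1) * stdev(treatment) ** 2 + (n2 - 1) * stdev(control) ** 2) / (
        n1 + n2 - 2
    )
    return (mean(treatment) - mean(control)) / pooled_var**0.5


if __name__ == "__main__":
    # Hypothetical 1-10 support ratings; the mean gap is 1 point in both cases.
    print(cohens_d([4, 5, 6], [3, 4, 5]))  # tight spread
    print(cohens_d([2, 5, 8], [1, 4, 7]))  # wide spread
```

Same absolute shift, different d, which is why d alone says little about cost-effectiveness.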

Potential moderators of the effect of social movements

- Target: state (ex: Hong Kong protesting Chinese control) vs. social (ex: protesting the mistreatment of animals)

- We hypothesized that third parties would be more supportive of violence against governments

- Context: real social movements vs. hypothetical movements

- Historical context could influence people's positions on a movement

- Location: domestic vs. foreign

- We hypothesized that third parties would be less supportive of violent movements when the movements operate domestically, because they're more likely to be negatively affected

- me: ex: Americans might be more likely to support violent protests in Hong Kong than in America (authors did not give an example)

Method

- Many experiments on violent vs. nonviolent protests have no control group, so it's not clear whether nonviolence increases approval or violence decreases approval or both

- We defined "violent" as damage to people or property

- Throwing stones through windows is violent; blocking roads or shouting down speeches is nonviolent

Eligibility criteria

- Studies where the participant was the unit of analysis (rather than sociological studies)

- Experimental, quasi-experimental, correlational, cross-sectional, or longitudinal designs

- Only included correlational studies that measured both independent variable (violent vs. nonviolent) and dependent variable (support for movement)

- Dependent variable is attitudinal or behavioral support for a social movement's strategy

- Included published and unpublished research, using listservs to collect unpublished works (conference presentations, dissertations, manuscripts under review)

- Out of 16 studies, 14 used between-subject design, 1 was correlational, 1 was longitudinal

- me: Paper worded this confusingly. The paper counted 1 correlational, 2 longitudinal, and 1 within-subject, but I think two of those were excluded from the 16 studies analyzed (see pages 10–11)

- 13 out of 16 studies gave participants different scenarios to read. The other 3 showed videos to participants

- me: The 16 studies came from only 7 independent teams (Figure 2). That's still enough teams that I'm not too concerned about data errors / fraud

Meta-analytic procedure

- Some studies broke down nonviolent into moderate (ex: peaceful demonstration) vs. radical (ex: blockade). In those cases, we collapsed the data into a single condition

- Used a random-effects model

Results

- Third parties expressed greater support for non-violent movements than violent movements. d = 0.25, 95% CI [0.15, 0.35], z-stat = 4.83, p < .001 (me: likelihood ratio 116,000)

- I² = 37% (I² says how much of the variation is due to differences in true effect sizes between studies)

- Leave-one-out analysis gave d values from 0.23 to 0.28
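For reference, a random-effects pooled estimate, I², and a leave-one-out check can be sketched as below. These notes don't record which between-study variance estimator the paper used, so DerSimonian-Laird (a common default) is an assumption here, and the function names are my own.

```python
import math


def random_effects(effects, variances):
    """DerSimonian-Laird random-effects meta-analysis.

    effects: list of per-study effect sizes (e.g. Cohen's d)
    variances: list of per-study sampling variances (SE squared)
    Returns (pooled estimate, its SE, tau^2, I^2).
    """
    w = [1 / v for v in variances]
    sum_w = sum(w)
    fixed = sum(wi * yi for wi, yi in zip(w, effects)) / sum_w
    # Cochran's Q: weighted spread of studies around the fixed-effect estimate.
    q = sum(wi * (yi - fixed) ** 2 for wi, yi in zip(w, effects))
    df = len(effects) - 1
    c = sum_w - sum(wi**2 for wi in w) / sum_w
    tau2 = max(0.0, (q - df) / c) if c > 0 else 0.0  # between-study variance
    # I^2: share of the observed spread attributed to true differences between studies.
    i2 = max(0.0, (q - df) / q) if q > 0 else 0.0
    w_star = [1 / (v + tau2) for v in variances]
    pooled = sum(wi * yi for wi, yi in zip(w_star, effects)) / sum(w_star)
    return pooled, math.sqrt(1 / sum(w_star)), tau2, i2


def leave_one_out(effects, variances):
    """Pooled estimate recomputed with each study dropped in turn."""
    return [
        random_effects(effects[:i] + effects[i + 1 :], variances[:i] + variances[i + 1 :])[0]
        for i in range(len(effects))
    ]
```

Fed each study's d and variance, the min and max of the `leave_one_out` list correspond to the 0.23 to 0.28 range reported above.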

Directionality: Positive attitudes toward nonviolence or negative attitudes toward violence?

- 7 studies across 4 papers included a control condition

- For nonviolent condition compared to control condition, d = 0.168, 95% CI [–0.03, 0.36], z = 1.69, p = .09 (me: likelihood ratio 4.17)

- Violent vs. control had minimal difference. d = –0.036, 95% CI [–0.19, 0.12], z = –0.45, p = .65
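The likelihood ratios noted above match exp(z²/2). That quantity is the likelihood at the observed effect divided by the likelihood at zero effect, under a normal approximation. Here is a quick check, assuming that is the intended definition:

```python
import math


def max_likelihood_ratio(z):
    """Likelihood at the observed estimate divided by the likelihood at zero effect,
    assuming a normally distributed estimate: exp(z**2 / 2)."""
    return math.exp(z ** 2 / 2)


for label, z in [("overall", 4.83), ("nonviolent vs. control", 1.69),
                 ("violent vs. control", -0.45)]:
    print(f"{label}: z = {z}, LR about {max_likelihood_ratio(z):,.2f}")
```

By the same measure, the violent-vs.-control comparison (z = –0.45) gives a ratio of only about 1.1.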

Publication bias analysis

- We looked at d values for published studies (k = 8) vs. unpublished (k = 8)

- Published study d = 0.39, 95% CI [0.31, 0.47]

- Unpublished study d = 0.22, 95% CI [0.08, 0.35]

- Difference had Q = 4.82, p = .028
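This sketch reconstructs the published vs. unpublished Q from the reported CIs. It assumes each CI is the estimate ± 1.96 SE and that the two subgroups are independent. It gives about 4.5 rather than the reported 4.82. The gap is plausibly rounding in the CIs or a different subgroup test, which I haven't checked.

```python
import math


def se_from_ci(lower, upper, z_crit=1.96):
    """Standard error implied by a symmetric 95% confidence interval."""
    return (upper - lower) / (2 * z_crit)


def q_between(d1, ci1, d2, ci2):
    """Q statistic (chi-square, 1 df) for the difference between two independent subgroup estimates."""
    se1, se2 = se_from_ci(*ci1), se_from_ci(*ci2)
    z = (d1 - d2) / math.sqrt(se1 ** 2 + se2 ** 2)
    return z ** 2


def p_from_q(q):
    """p-value for a 1-df chi-square statistic (equivalently, two-sided z-test)."""
    return math.erfc(math.sqrt(q / 2))


q = q_between(0.39, (0.31, 0.47), 0.22, (0.08, 0.35))  # published vs. unpublished
print(f"Q about {q:.2f}, p about {p_from_q(q):.3f}")
```

Plugging the reported Q = 4.82 into `p_from_q` does reproduce the reported p = .028.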

- me: Funnel plot (including published and unpublished studies) shows no publication bias. If anything, more powerful studies had bigger mean effects

- Egger's regression: r = 0.124, p < 0.646

- Kendall's tau test: p < 0.565

- me: For published studies only (k = 8),

- Egger's regression: r = -0.221, p < 0.599

- Kendall's tau test: p < 0.720
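For reference, Egger's test regresses each study's standardized effect (d / SE) on its precision (1 / SE). An intercept far from zero suggests small-study effects. The sketch below computes the intercept and its t-statistic in plain Python. It skips the p-value to avoid pulling in a t-distribution library. `egger_test` is a hypothetical name, and the "r" values reported above may be a different summary than this intercept.

```python
import math


def egger_test(d, se):
    """Egger's regression test: OLS of d/se on 1/se.
    Returns (intercept, t-stat of intercept). An intercept far from 0 suggests
    funnel-plot asymmetry (small studies reporting systematically different effects)."""
    y = [di / si for di, si in zip(d, se)]  # standardized effects
    x = [1 / si for si in se]               # precision
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    slope = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    intercept = y_bar - slope * x_bar
    resid = [yi - intercept - slope * xi for xi, yi in zip(x, y)]
    s2 = sum(r ** 2 for r in resid) / (n - 2)  # residual variance
    se_int = math.sqrt(s2 * (1 / n + x_bar ** 2 / sxx))
    t = intercept / se_int if se_int > 0 else float("nan")
    return intercept, t
```

If every study had the same true effect and no small-study bias, d / SE would be proportional to 1 / SE and the intercept would be near zero.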

Analysis of potential moderators

- All three binary variables were correlated with each other (p < .001) and with publication status (published vs. unpublished)

- Context (real vs. hypothetical) had a marginal effect (z-stat = 1.82, p = .068)

- Real context d = 0.36 (z-stat = 9.59)

- Hypothetical context d = 0.12 (z-stat = 0.90, p = .4)

- Could be a false positive (we tested 3 hypotheses)

- me: note that this can't be explained by pre-existing support for real social movements because studies looked at the difference between support in different experimental conditions

- Target (state vs. social) had minimal effect (z-stat = 0.82, p = .4)

- Location (domestic vs. foreign) had minimal effect (z-stat = –0.76, p = .4)

- Publication status had minimal effect (z-stat = 0.80, p = .4)

- me: I think this is comparing means rather than comparing Cohen's d. So it looks like published studies had stronger d values but not higher means
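Here is one way to gauge how much the "3 hypotheses" caveat matters. A Bonferroni adjustment is a simple multiple-comparisons correction that multiplies each p-value by the number of tests; the paper doesn't say it used one. Treating context, target, and location as a family of 3 tests, the marginal context result goes from p = .068 to about .20:

```python
def bonferroni(p_values):
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


# context, target, location (the reported p's of .4 are rounded)
print(bonferroni([0.068, 0.4, 0.4]))
```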

Discussion

- Limited ability to draw conclusions from comparisons to control groups, because studies did different things in their control groups and only 4 papers (7 studies) had control groups

- We hypothesized that target (state vs. social) and location (domestic vs. foreign) would affect support for violence, but that did not appear to be the case

- We found evidence of publication bias in the effect sizes (d) of published vs. unpublished studies

- 73% of participants were Americans

- A few studies used donating money or signing a petition as behavioral measures, but most studies measured attitudes or behavioral intentions. Measuring behavior is better

- Cultural context determines what actions qualify as normative vs. non-normative, or violent vs. nonviolent (ex: stone-throwing)

- Historical context could be relevant, e.g. people might support violence more when nonviolence has failed. Only Orazani & Leidner (2018b) has addressed this

me: My thoughts

- This is a solid meta-analysis TBH, they cover the important points so I don't have much to add

When Are Social Protests Effective? (2024) causepri protests

When are social protests effective.pdf https://www.hbs.edu/ris/Publication%20Files/When%20are%20social%20protests%20effective_67978754-eaf9-4414-aae1-16db9ef13812.pdf

- Nonviolent protests are effective at mobilizing sympathizers to support the cause

- More disruptive protests can motivate support for policy change among resistant individuals

Importance of understanding the effects of social protest

- Many findings indicate that normative and nonviolent protests are the most effective

- normative = expressing discontent in the socially normative manner

- However, some evidence suggests that non-normative nonviolent protests are more effective; or that nonviolent protests with radical flanks are more effective

- We organize conflicting findings to explain the differences

- Need to identify three elements: (1) type of protest; (2) type of audience; (3) type of social change outcome

- (1) Normative nonviolent protests are more effective for (3) mobilizing a (2) sympathetic audience

- (1) Non-normative, radical-flank, or violent protests are more effective for (3) policy change with a (2) resistant audience

- me: It seems implausible that radical/violent protests would be more effective at persuading resistant individuals

- me: In general, I'm skeptical of the methodology where you take two conflicting results and assume that some particular difference between the studies is the cause of the outcome difference. That's a hypothesis, but you need to test it

A tailored approach to the effectiveness of social protests

Types of social protest

- Normative nonviolent = peaceful demonstrations, rallies. ex: Women's March 2017, Climate March 2015

- Non-normative nonviolent = civil disobedience, sit-ins, blocking roads. ex: Montgomery Bus Boycott, SNCC sit-ins

- Violent = riots, property destruction. ex: LA Riots 1992

Type of social change outcomes

- mobilization: can social protests attract people to support or join the protest?

- policy: can protests advance desired policy changes?

Using a tailored approach to organize and integrate previous findings

- There is disagreement over whether non-normative or even violent protests can sometimes be effective

Effectiveness of normative nonviolent protests

- People support normative nonviolent protests more than violent ones

- Evidence: observational studies on actual protests and experimental surveys

- Non-normative or violent protests decreased mobilization

- Normative nonviolent protests may be more effective at mobilizing supporters because they're easier and less risky to participate in

Effectiveness of disruptive protests: non-normative nonviolent, radical flank, and violent

- When study participants were randomly exposed to normative nonviolent, non-normative nonviolent, and violent protests, non-normative nonviolent protests were most effective in increasing support for policy change among those who were more resistant

- Shuman et al. (2020). Disrupting the system constructively: Testing the effectiveness of nonnormative nonviolent collective action.

- Observational study found that Civil Rights sit-ins were most effective in counties with medium-high support for segregation

- An analysis of a large number of social movements found that movements with a violent radical flank were more likely to achieve their policy goals than wholly nonviolent movements. Tompkins, E. (2015). A Quantitative Reevaluation of Radical Flank Effects within Nonviolent Campaigns.

- me: this could easily be confounded, e.g. more popular protests are more likely to have a diversity of protest strategies

- Violent flanks make governments more likely to make concessions to moderates. Belgioioso, M., Costalli, S., & Gleditsch, K. S. (2019). Better the Devil You Know? How Fringe Terrorism Can Induce an Advantage for Moderate Nonviolent Campaigns.

- me: quick look at this paper suggests it's low quality

- Some evidence suggests that radical flanks can increase mobilization for the normative nonviolent group

- Non-normative protests are more effective at persuading resistant individuals because those individuals will make policy concessions to make the protests go away

- me: This seemingly contradicts the earlier claim that non-normative Civil Rights protests increased public support for integration. If non-normative protests work by being annoying (so to speak), then if anything they should decrease public support

- There is mixed evidence on whether entirely violent protests can be effective for achieving policy outcomes

- supporting evidence: violent LA Riots increased support for local policy reforms; Israelis who live close to Palestinian violence are more likely to support policy concessions

- conflicting evidence: similar research found that exposure to political violence made Israelis prefer harsher policy; one study found that violence in Civil Rights movement decreased voter support

me: My thoughts

- Evidence presented was mostly weak (from what I could tell)

- The claim that non-normative protests are more effective among resistant audiences was particularly poorly supported. The provided psychological explanation was that resistant audiences will make concessions to make the protesters go away, which makes sense, but that should cause public support to decrease (even if it's successful at achieving policy outcomes). Some of the cited evidence suggested increased public support

- I am reasonably convinced that normative protests are effective (but because of quasi-experiments I've read previously, not because of this article). I am still uncertain about the effect of non-normative protests and this article did not update my beliefs much. I anticipated that there would be some supporting evidence but that it would be weak, and that was indeed the case

- The one positive surprise was that non-normative protests did best in experiments where the researchers showed articles to study participants (Shuman et al. 2020). I would not have guessed that

Swedroe: Trend Following – Timing Fast and Slow Trends (2022) finance trend

https://alphaarchitect.com/trend-following-timing-fast-and-slow-trends/

- Slow TSMOM tends to outperform fast TSMOM when market volatility is low, and vice versa when vol is high

- Slow TSMOM performs worse at market turning points because it takes longer to re-position

- But fast TSMOM whipsaws more

- Research, including Tail Risk Mitigation with Managed Volatility Strategies, has found that past vol predicts near-term future vol

- Could address downsides by blending short-term, medium-term, and long-term trend signals
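Here is a minimal sketch of blending trend signals across horizons. It assumes monthly prices and a simple sign-of-return rule for each lookback. The 1/3/12-month lookbacks and the equal weighting are my assumptions for illustration, not from the article.

```python
def tsmom_signal(prices, lookback):
    """Sign of the return over the last `lookback` periods: +1, -1, or 0 if flat."""
    if len(prices) <= lookback:
        raise ValueError("need more than `lookback` prices")
    ret = prices[-1] / prices[-1 - lookback] - 1
    return (ret > 0) - (ret < 0)


def blended_signal(prices, lookbacks=(1, 3, 12)):
    """Equal-weight average of trend signals across horizons, in [-1, 1]."""
    signals = [tsmom_signal(prices, lb) for lb in lookbacks]
    return sum(signals) / len(signals)


# Hypothetical prices: flat for a year, then up, then a one-month dip
print(blended_signal([10] * 12 + [12, 11]))
```

The blend lets a one-month reversal trim the position rather than flip it, which is the kind of whipsaw reduction the article is pointing at.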

Trending Fast and Slow (2022)

- Tested fast (1-month) vs. slow (12-month) momentum on US equities over 2000–2020. Slow had a better Sharpe ratio but fast had smaller drawdowns

- Trend signals shared the same sign 64% of the time

- Authors trained a decision tree on 1971–2005 and tested on 2006–2020

- Fast outperformed slow when one-month vol exceeded a threshold (17%)
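This sketch reduces the decision-tree rule above to a single split: follow the fast signal when recent volatility is above the threshold, otherwise follow the slow one. It assumes the 17% threshold is annualized volatility computed from roughly one month of daily returns. The paper's fitted tree may have more structure than this. The sketch also includes the sign-agreement rate behind the 64% figure. Function names are hypothetical.

```python
import math
import statistics


def annualized_vol(daily_returns, trading_days=252):
    """Sample stdev of daily returns scaled to an annual figure."""
    return statistics.stdev(daily_returns) * math.sqrt(trading_days)


def switching_signal(fast_signal, slow_signal, one_month_vol, threshold=0.17):
    """Use the fast signal in high-vol regimes and the slow signal otherwise."""
    return fast_signal if one_month_vol > threshold else slow_signal


def agreement_rate(fast_signals, slow_signals):
    """Fraction of periods where the two signals have the same sign."""
    same = sum(f == s for f, s in zip(fast_signals, slow_signals))
    return same / len(fast_signals)


# Hypothetical month (21 trading days) of alternating +/-1.5% daily moves
vol = annualized_vol([0.015, -0.015] * 10 + [0.015])
print(vol, switching_signal(fast_signal=-1, slow_signal=1, one_month_vol=vol))
```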

Performance 2006–2020:

| | Slow | Fast | 50/50 | Decision Tree |
|---|---|---|---|---|
| CAGR | 2.9% | 2.4% | 3.2% | 6.8% |
| Stdev | 15.3% | 15.3% | 11.7% | 15.2% |
| Sharpe | 0.19 | 0.16 | 0.27 | 0.45 |
| MaxDD | –41% | –31% | –28% | –24% |
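Here are two sanity checks on the performance figures. First, the Sharpe numbers match CAGR / Stdev for every strategy. That would hold if the returns are already net of the risk-free rate, which is an assumption. Second, suppose the 50/50 portfolio is an equal mix of two strategies that each have 15.3% vol. Then its 11.7% vol implies a correlation of only about 0.17 between slow and fast. This ignores rebalancing and compounding effects.

```python
# Figures copied from the 2006–2020 performance table: (CAGR %, Stdev %, reported Sharpe)
table = {
    "Slow": (2.9, 15.3, 0.19),
    "Fast": (2.4, 15.3, 0.16),
    "50/50": (3.2, 11.7, 0.27),
    "Decision Tree": (6.8, 15.2, 0.45),
}

for name, (cagr, stdev, sharpe) in table.items():
    print(f"{name}: CAGR/Stdev = {cagr / stdev:.2f}, reported Sharpe = {sharpe}")

# If 50/50 = 0.5 * slow + 0.5 * fast and both have vol sigma, then
# var_mix = 0.5 * sigma**2 * (1 + rho), so rho = 2 * (vol_mix / sigma)**2 - 1.
sigma, vol_mix = 15.3, 11.7
rho = 2 * (vol_mix / sigma) ** 2 - 1
print(f"implied slow/fast correlation: {rho:.2f}")
```

A low correlation would also explain why the 50/50 mix has a higher Sharpe than either component on its own.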

Can Violent Protest Change Local Policy Support? Evidence from the Aftermath of the 1992 Los Angeles Riot (2019) causepri protests

- We analyze the effect of the riots by looking at voter support for

- public schools, which were closely linked to African Americans and may have been seen as a way to address the policy concerns of the rioters

- universities, which were not

- We found that support for public schools increased more between 1990–1992 than support for universities did

- me: this methodology is actually kinda decent so I take it as an evidence update

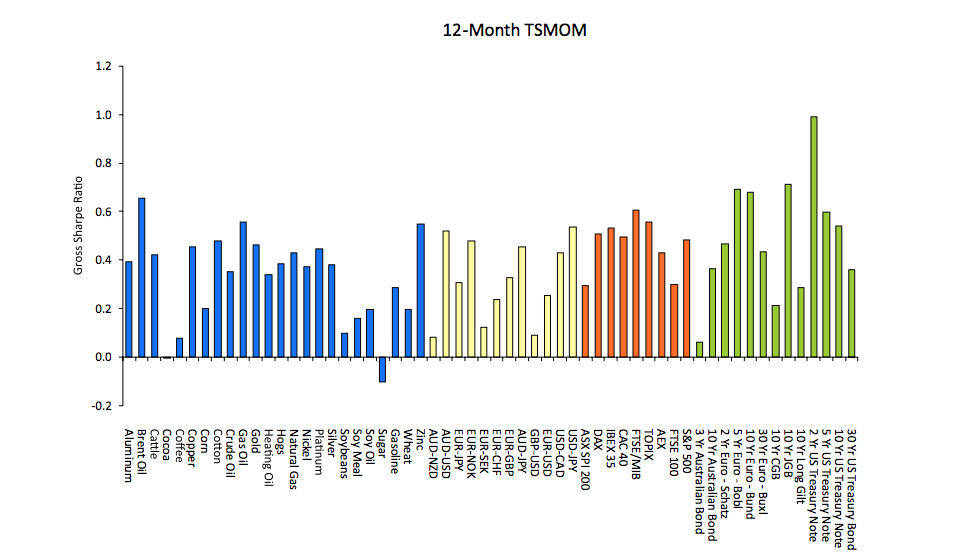
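The comparison above is essentially a difference-in-differences. It takes the change in support for the schools measure and subtracts the change in support for the universities measure, with universities acting as the control. Here is a minimal sketch, assuming precinct-level support shares for both measures in both years. The inputs are hypothetical, and the paper's actual regression specification may include controls not shown here.

```python
from statistics import mean


def diff_in_diff(schools_before, schools_after, univ_before, univ_after):
    """Average change in support for the schools measure minus the average change
    for the universities measure. Each argument is a list of precinct-level support
    shares, with precincts in the same order in every list."""
    school_change = mean(a - b for a, b in zip(schools_after, schools_before))
    univ_change = mean(a - b for a, b in zip(univ_after, univ_before))
    return school_change - univ_change
```

Using universities as the control nets out any general shift in willingness to fund education between 1990 and 1992. That is why this design is more convincing than looking at school support alone.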

Time-Series Momentum: Is It There? finance trend

- Time series momentum (TSMOM) has a large t-stat

- But that t-stat is not reliable, because it is smaller than the critical values from parametric and non-parametric bootstraps

- TSMOM is profitable, but performance is the same as a similar strategy based on historical average return and does not require predictability
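Here is a sketch of the two position rules being compared, assuming monthly returns for a single asset. TSMOM takes the sign of the trailing 12-month return, computed by summing monthly returns (exact for log returns). TSH takes the sign of the asset's mean return over all available history. These are my simplified readings of the strategies. The paper's versions likely add volatility scaling.

```python
from statistics import mean


def sign(x):
    return (x > 0) - (x < 0)


def tsmom_position(monthly_returns, lookback=12):
    """+1/-1 by the sign of the trailing `lookback`-month (log) return."""
    return sign(sum(monthly_returns[-lookback:]))


def tsh_position(monthly_returns):
    """+1/-1 by the sign of the asset's mean return over all history so far."""
    return sign(mean(monthly_returns))


# Hypothetical asset: two good years, then a bad one
history = [0.02] * 24 + [-0.01] * 12
print(tsmom_position(history), tsh_position(history))  # prints -1 1: the rules disagree here
```

For an asset with a long positive history, TSH is simply long almost all the time. So if TSH matches TSMOM's performance, much of TSMOM's profit may come from average returns rather than from predictability.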

Introduction

- We use the same data set as Moskowitz, Ooi & Pedersen (MOP), looking at 12-month TSMOM

- Only 8 out of 55 individual assets have a t-stat > 1.65 (p < 0.1)

- me: Seems like the wrong way of looking at it. That's like seeing that the majority of individual stocks have low t-stats and concluding that the equity risk premium doesn't exist

- On a pooled regression, we find t = 4.34

- But we believe the pooled regression overstates significance by not controlling for varying asset means

- We use two bootstrap methods, (1) a "wild" bootstrap using fitted pooled regression residuals, and (2) a "paired" bootstrap that resamples the predictor and dependent variable simultaneously

- Our bootstraps produce 5% critical values of 12.53 and 4.83 respectively, larger than the 4.34 t-stat

- me: idk what any of that means, hopefully it will make sense later

- We propose a Time Series History (TSH) strategy that goes long/short assets with positive/negative historical mean returns. TSH performs virtually the same as TSMOM
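Here is the bootstrap idea in concrete form. A "paired" bootstrap resamples (predictor, return) pairs with replacement and refits the regression each time. The spread of the recentred t-statistics then gives a critical value that doesn't rely on the usual normal approximation. The sketch below is a pairs bootstrap-t for a single OLS slope. It doesn't model the cross-asset pooling or the wild bootstrap from the paper, and all names are hypothetical.

```python
import math
import random


def ols_slope(x, y):
    """Slope of y on x and its conventional standard error; None if degenerate."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    if sxx == 0:
        return None
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    a = y_bar - b * x_bar
    s2 = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y)) / (n - 2)
    return (b, math.sqrt(s2 / sxx)) if s2 > 0 else None


def paired_bootstrap_critical_value(x, y, n_boot=999, level=0.95, seed=0):
    """Two-sided critical value for the slope t-stat from a pairs bootstrap-t."""
    rng = random.Random(seed)
    fit = ols_slope(x, y)
    if fit is None:
        raise ValueError("degenerate regression")
    b_hat = fit[0]
    n = len(x)
    t_stars = []
    for _ in range(n_boot):
        idx = [rng.randrange(n) for _ in range(n)]  # resample (x, y) pairs together
        refit = ols_slope([x[i] for i in idx], [y[i] for i in idx])
        if refit is None:
            continue  # skip rare degenerate resamples
        b_star, se_star = refit
        # Recentre at the original estimate so the draws mimic the null distribution
        t_stars.append(abs(b_star - b_hat) / se_star)
    t_stars.sort()
    return t_stars[math.ceil(level * len(t_stars)) - 1]
```

The observed t-stat only counts as significant at 5% if it exceeds the returned value. In the paper's pooled setting, the bootstrap critical values (12.53 and 4.83) came out far above the usual 1.96, which is the core of the argument.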